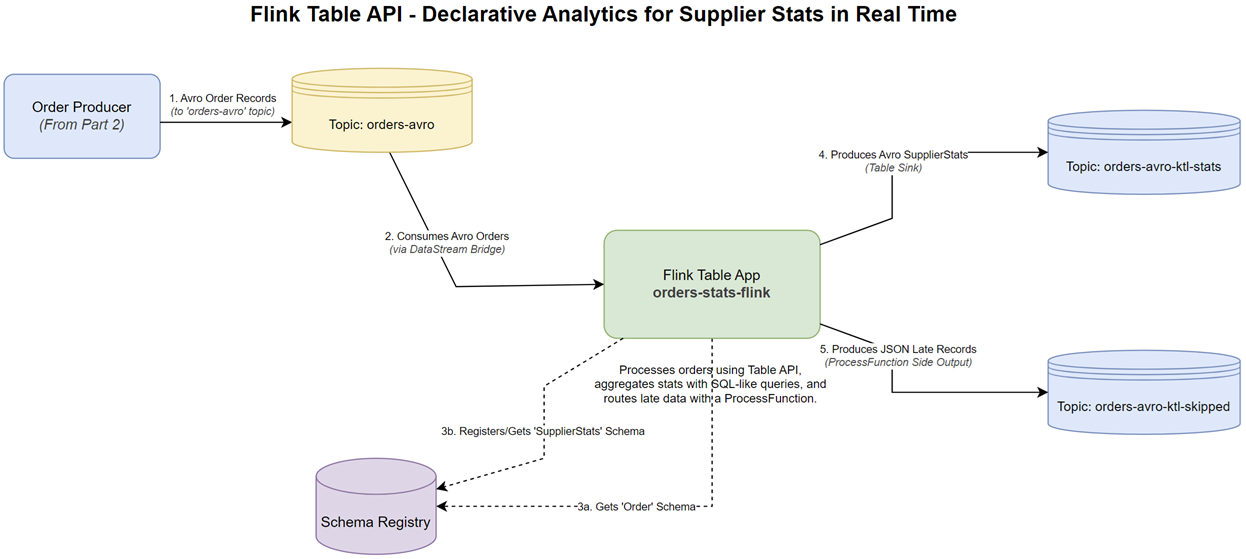

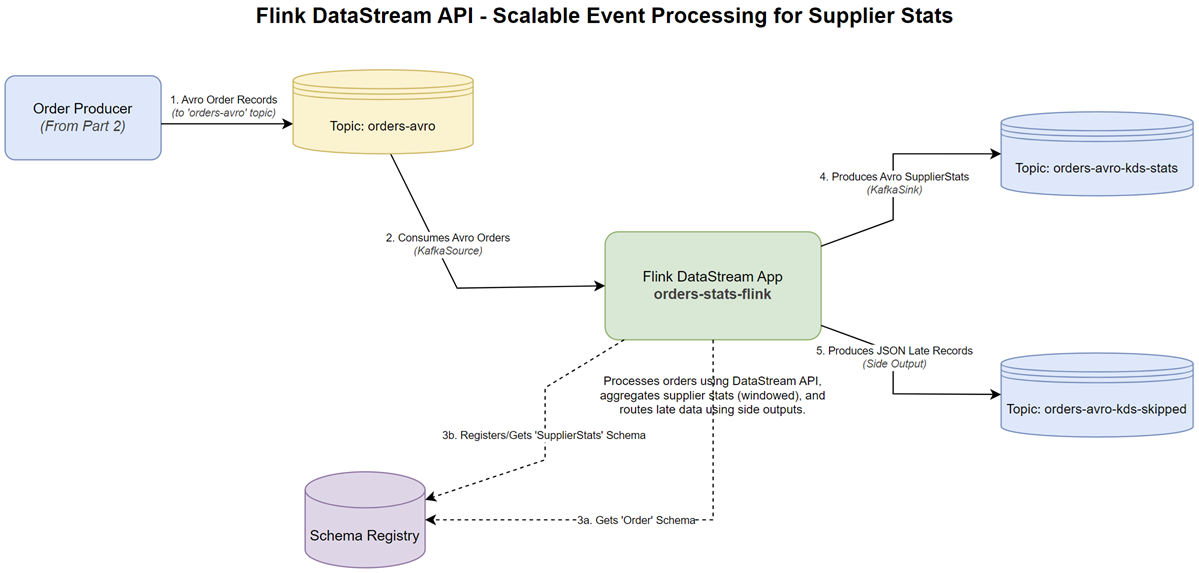

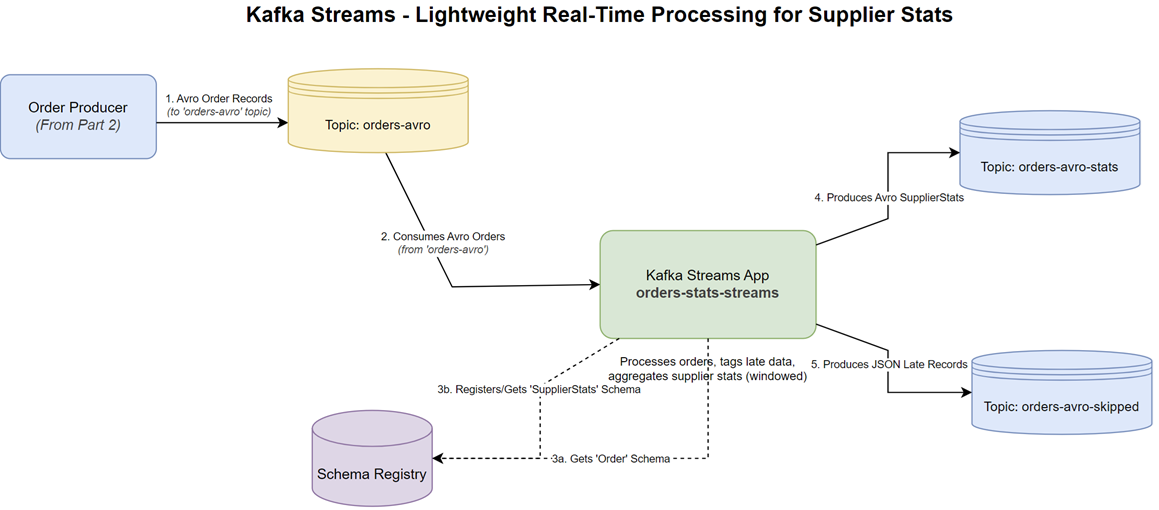

A couple of years ago, I read *Stream Processing with Apache Flink* and worked through the examples using PyFlink. The book offered a solid introduction to Flink, but I kept running into limitations in the Python API, because many of the features the book covers weren’t supported. This time, I decided to revisit the material using Kotlin, and the experience has been much more rewarding and fun.

In porting the examples to Kotlin, I also took the opportunity to align the code with modern Flink practices. The complete source for this post is available in the stream-processing-with-flink directory of the flink-demos GitHub repository.