According to the project GitHub repository,

Cronicle is a multi-server task scheduler and runner, with a web based front-end UI. It handles both scheduled, repeating and on-demand jobs, targeting any number of slave servers, with real-time stats and live log viewer.

By default, Cronicle is configured to launch a single master server - task scheduling is controlled by the master server. For high availability, it is important that another server takes the role of master when the existing master server fails.

This post demonstrates a multi-server Cronicle configuration using Docker, with Nginx as the load balancer. Specifically, a master server and a backup server are set up behind the load balancer. The backup server is a slave server that can take over the role of master when the master is not available.

The source of this post can be found here.

Cronicle Docker Image

There isn’t an official Docker image for Cronicle, so I built one on top of the python:3.6 image. The Dockerfile is shown below.

    FROM python:3.6

    ARG CRONICLE_VERSION=v0.8.28
    ENV CRONICLE_VERSION=${CRONICLE_VERSION}

    # Node
    RUN curl -sL https://deb.nodesource.com/setup_8.x | bash - \
      && apt-get install -y nodejs \
      && curl -sL https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add - \
      && echo "deb https://dl.yarnpkg.com/debian/ stable main" | tee /etc/apt/sources.list.d/yarn.list \
      && apt-get update && apt-get install -y yarn

    # Cronicle
    RUN curl -s "https://raw.githubusercontent.com/jhuckaby/Cronicle/${CRONICLE_VERSION}/bin/install.js" | node \
      && cd /opt/cronicle \
      && npm install aws-sdk

    EXPOSE 3012
    EXPOSE 3014

    ENTRYPOINT ["/docker-entrypoint.sh"]

Cronicle is written in Node.js, so Node.js has to be installed as well. aws-sdk is not strictly required, but it is added to test S3 integration later. Port 3012 is Cronicle’s default web port, and port 3014 is used for server auto-discovery via UDP broadcast, so exposing it may not be required.

docker-entrypoint.sh is used to start a Cronicle server. For master, one more step is necessary, which is initializing the storage system. An environment variable (IS_MASTER) will be used to control storage initialization.

    #!/bin/bash

    set -e

    if [ "$IS_MASTER" = "0" ]
    then
      echo "Running SLAVE server"
    else
      echo "Running MASTER server"
      # Initialize the storage system (master only)
      /opt/cronicle/bin/control.sh setup
    fi

    /opt/cronicle/bin/control.sh start

    # Keep the container running and report the server status periodically
    while true;
    do
      sleep 30;
      /opt/cronicle/bin/control.sh status
    done

A custom Docker image, cronicle-base, is built as follows.

    docker build -t=cronicle-base .

Load Balancer

Nginx is used as the load balancer. Its config file is shown below. It listens on port 8080 and passes each request to cronicle1:3012 or cronicle2:3012.

    events { worker_connections 1024; }

    http {
      upstream cronicles {
        server cronicle1:3012;
        server cronicle2:3012;
      }

      server {
        listen 8080;

        location / {
          proxy_pass http://cronicles;
          proxy_set_header Host $host;
        }
      }
    }
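Because the upstream block does not name a balancing method, Nginx falls back to its default, which is round-robin across the listed servers. The following Python sketch only illustrates that selection order. The `make_picker` and `pick_backend` names are hypothetical helpers for this illustration and are not part of Nginx or Cronicle.

```python
from itertools import cycle

# Upstream servers, in the order they are listed in the Nginx config.
BACKENDS = ["cronicle1:3012", "cronicle2:3012"]


def make_picker(backends):
    """Return a function that hands out backends in round-robin order."""
    rotation = cycle(backends)
    return lambda: next(rotation)


pick_backend = make_picker(BACKENDS)

# Four consecutive requests alternate between the two servers.
first_four = [pick_backend() for _ in range(4)]
print(first_four)
```

Real Nginx also accounts for server weights and failed servers, which this sketch leaves out.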

In order for Cronicle servers to be served behind the load balancer, the following changes are made (complete config file can be found here).

    {
      "base_app_url": "http://loadbalancer:8080",

      ...

      "web_direct_connect": true,

      ...
    }

First, base_app_url should be set to the load balancer URL rather than an individual server’s URL. Second, web_direct_connect should be set to true. According to the project repository,

If you set this parameter (web_direct_connect) to true, then the Cronicle web application will connect directly to your individual Cronicle servers. This is more for multi-server configurations, especially when running behind a load balancer with multiple backup servers. The Web UI must always connect to the master server, so if you have multiple backup servers, it needs a direct connection.
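If the config file is generated rather than edited by hand, the two changes can be applied programmatically. The sketch below is one possible way to do it, assuming both keys sit at the top level of config.json as in the excerpt above. The `apply_load_balancer_settings` function and the starting values are hypothetical and only serve the illustration.

```python
import json


def apply_load_balancer_settings(config, load_balancer_url):
    """Return a copy of a Cronicle config dict adjusted for a load balancer."""
    updated = dict(config)
    # Point the app URL at the load balancer instead of a single server.
    updated["base_app_url"] = load_balancer_url
    # Let the web UI connect directly to the individual Cronicle servers.
    updated["web_direct_connect"] = True
    return updated


# Hypothetical starting values, for illustration only.
original = {"base_app_url": "http://localhost:3012", "web_direct_connect": False}
patched = apply_load_balancer_settings(original, "http://loadbalancer:8080")
print(json.dumps(patched, indent=2))
```

In practice the dict would be loaded from and written back to config.json with `json.load` and `json.dump`.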

Launch Servers

Docker Compose is used to launch two Cronicle servers (master and backup) and a load balancer. The cronicle1 service is the master and cronicle2 is the backup server. Note that both servers should use the same configuration file (config.json). Also, because the backup server may take over the role of master, it needs access to the same data, so ./backend/cronicle/data is mapped into both servers. (Cronicle supports S3 and Couchbase as storage back ends as well.)

    version: '3.2'

    services:
      loadbalancer:
        container_name: loadbalancer
        hostname: loadbalancer
        image: nginx
        volumes:
          - ./loadbalancer/nginx.conf:/etc/nginx/nginx.conf
        tty: true
        links:
          - cronicle1
        ports:
          - 8080:8080
      cronicle1:
        container_name: cronicle1
        hostname: cronicle1
        image: cronicle-base
        #restart: always
        volumes:
          - ./sample_conf/config.json:/opt/cronicle/conf/config.json
          - ./sample_conf/emails:/opt/cronicle/conf/emails
          - ./docker-entrypoint.sh:/docker-entrypoint.sh
          - ./backend/cronicle/data:/opt/cronicle/data
        entrypoint: /docker-entrypoint.sh
        environment:
          IS_MASTER: "1"
      cronicle2:
        container_name: cronicle2
        hostname: cronicle2
        image: cronicle-base
        #restart: always
        volumes:
          - ./sample_conf/config.json:/opt/cronicle/conf/config.json
          - ./sample_conf/emails:/opt/cronicle/conf/emails
          - ./docker-entrypoint.sh:/docker-entrypoint.sh
          - ./backend/cronicle/data:/opt/cronicle/data
        entrypoint: /docker-entrypoint.sh
        environment:
          IS_MASTER: "0"

The services can be started as follows.

    docker-compose up -d

Add Backup Server

Once started, the Cronicle web app is accessible at http://localhost:8080, and it’s possible to log in as the admin user. The username and password are both admin.

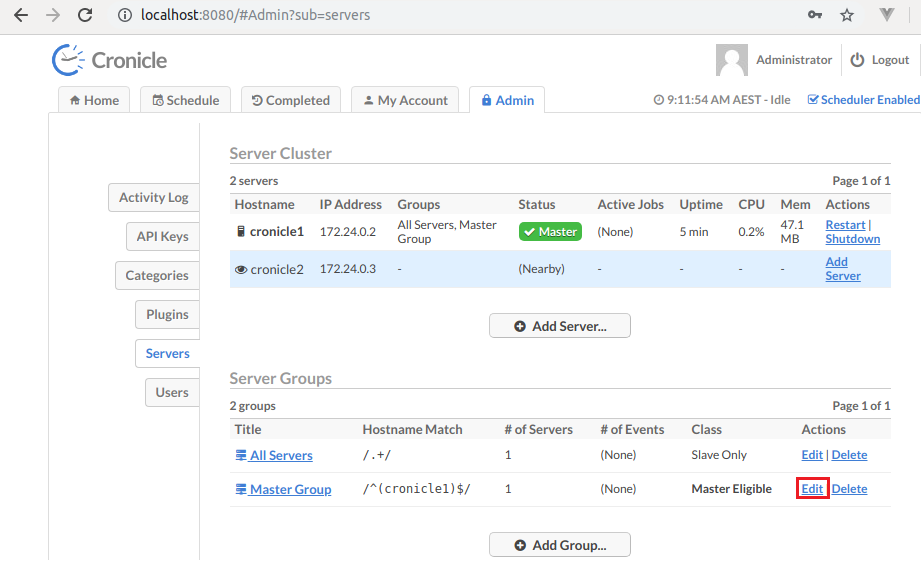

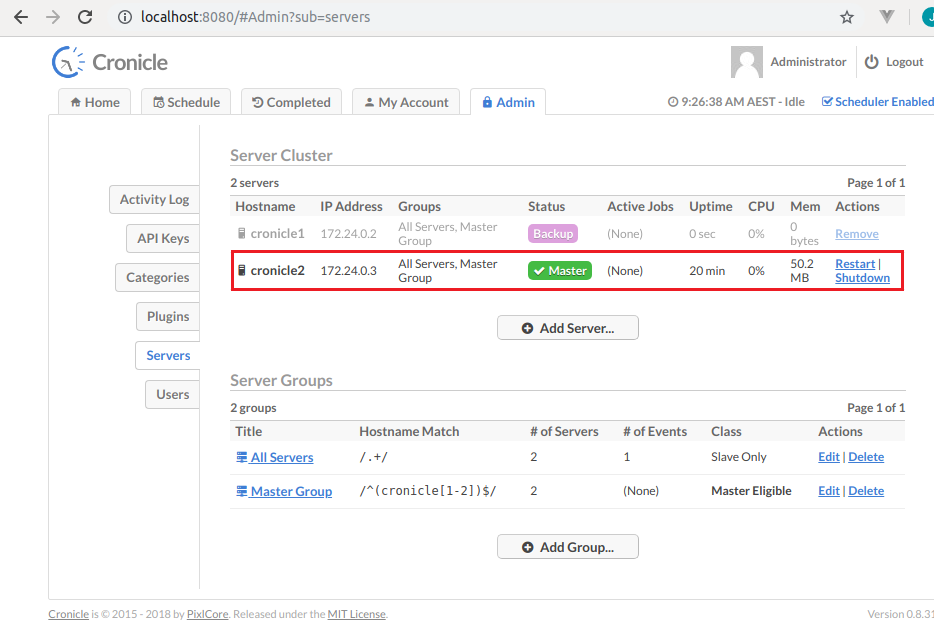

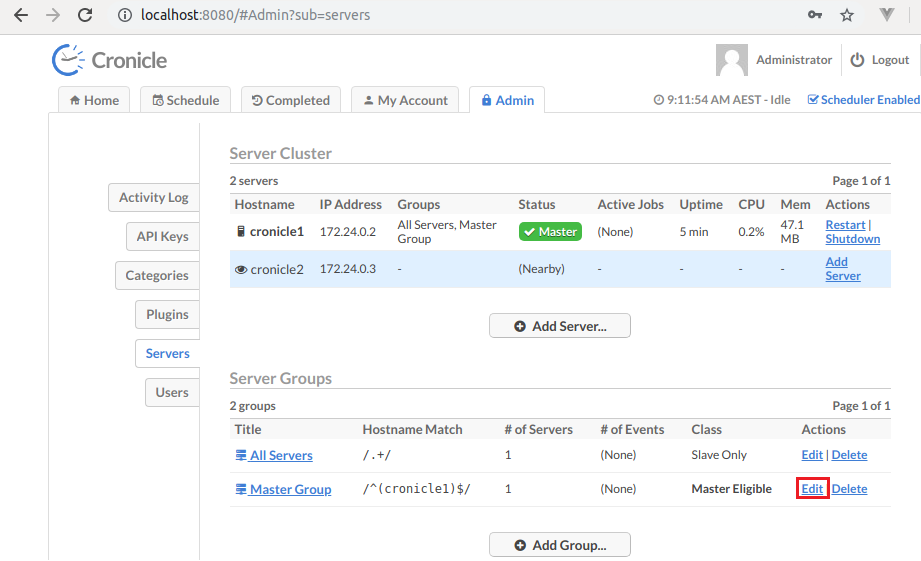
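The containers can take a little while to start answering requests, so it can help to wait for the load balancer before opening the UI. The following is a minimal sketch using only the Python standard library. The `wait_until_up` helper is hypothetical, and it treats any HTTP response, including an error status, as a sign that the server is up.

```python
import time
import urllib.error
import urllib.request


def wait_until_up(url, timeout=60.0, interval=2.0):
    """Poll url until it returns any HTTP response or the timeout passes."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server answered, even if with an error status.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: not up yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Example call (not executed here):
# wait_until_up("http://localhost:8080", timeout=60)
```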

In Admin > Servers, both Cronicle servers are listed. The master server is recognized as expected, but the backup server (cronicle2) has not yet been added as a backup.

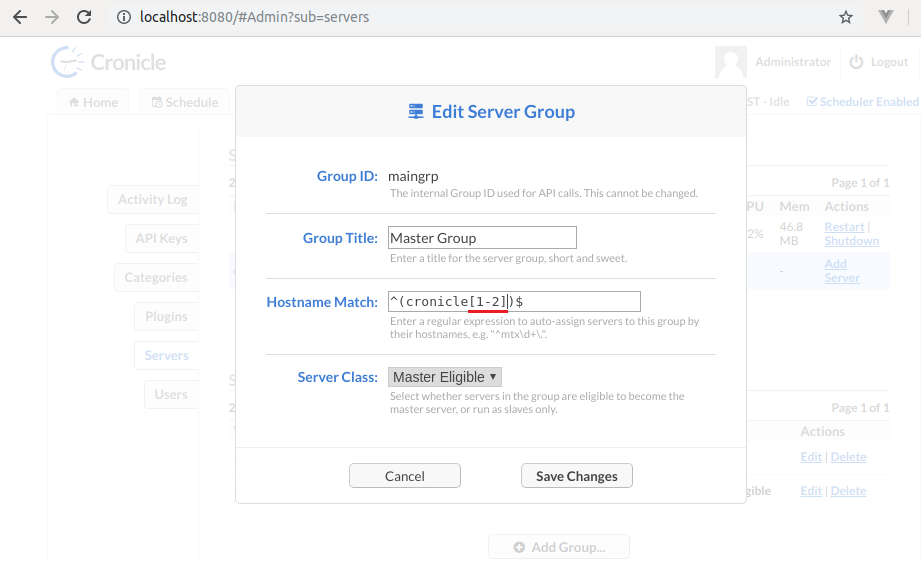

By default, two server groups (All Servers and Master Group) are created, and the backup server should be added to the Master Group. To do so, the Hostname Match regular expression is changed to ^(cronicle[1-2])$.
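The pattern can be checked quickly before entering it in the UI. Cronicle evaluates the expression in JavaScript, but for a pattern this simple Python’s `re` module behaves the same way, so the sketch below uses it. The `in_master_group` helper and the extra hostnames are hypothetical and only illustrate which names the pattern accepts.

```python
import re

# The Hostname Match expression used for the Master Group.
HOSTNAME_MATCH = re.compile(r"^(cronicle[1-2])$")


def in_master_group(hostname):
    """Return True if the hostname matches the Master Group pattern."""
    return HOSTNAME_MATCH.search(hostname) is not None


for name in ["cronicle1", "cronicle2", "cronicle3", "loadbalancer"]:
    print(name, in_master_group(name))
```

Only cronicle1 and cronicle2 match. The anchors ^ and $ keep names such as cronicle10 or my-cronicle1 out of the group.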

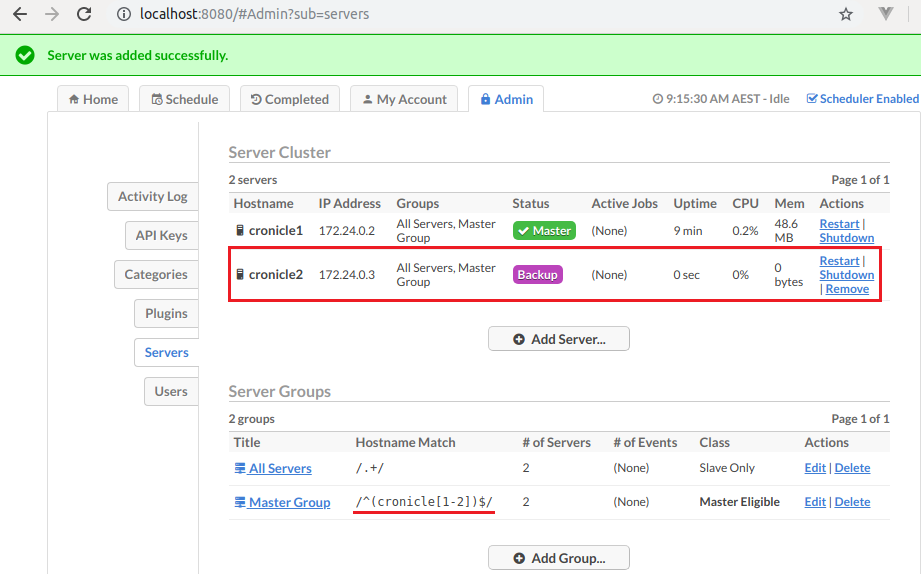

The backup server is then recognized correctly.

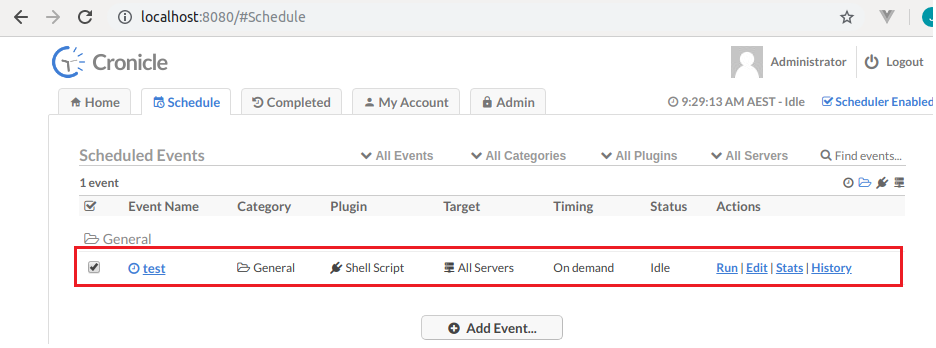

Create Event

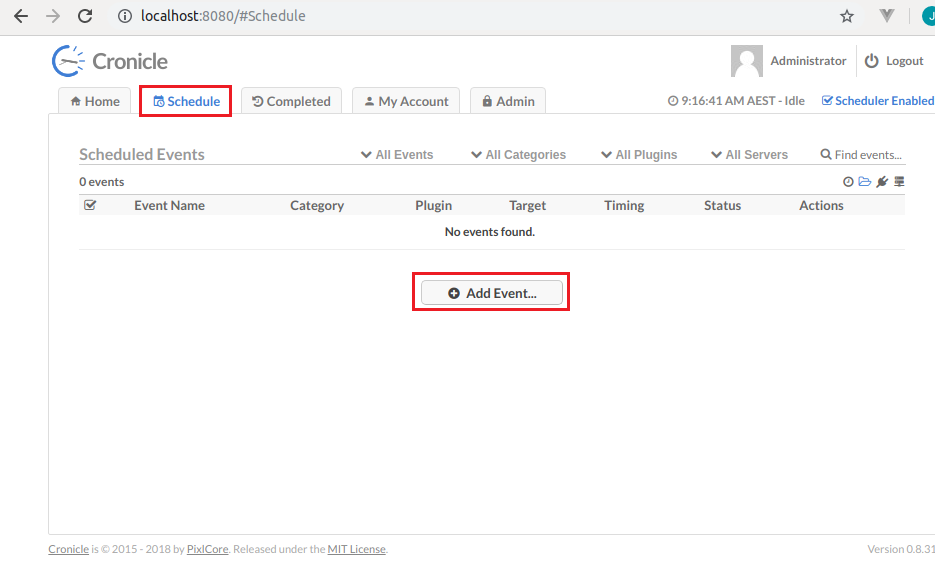

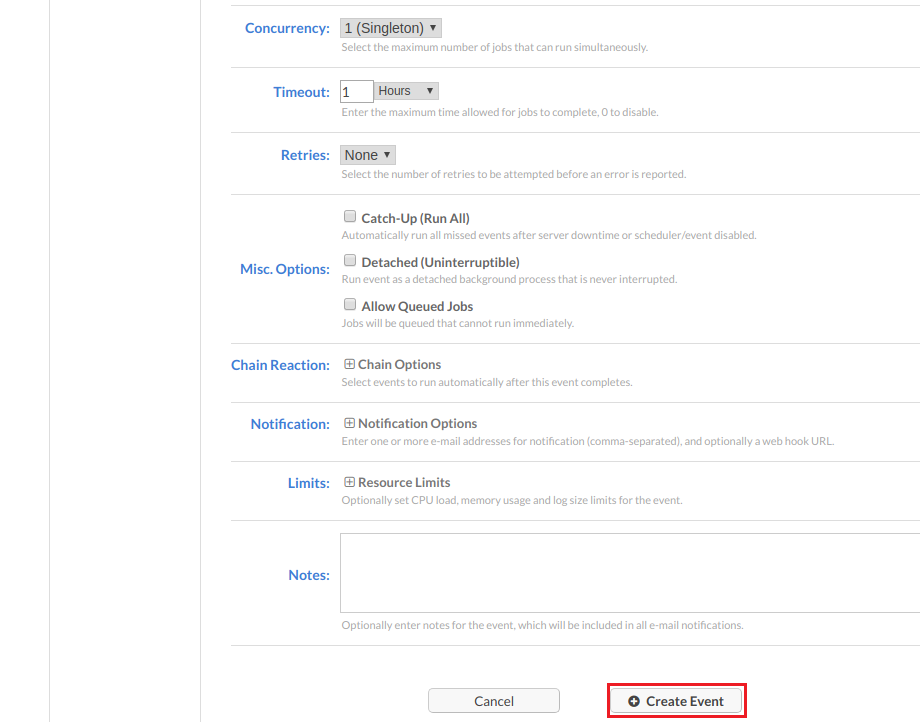

A test event is created to show that an event created on the original master remains available on the backup server once it takes over the role of master.

Cronicle has a web UI that makes it easy to manage and monitor scheduled events. It also has a management API, so many tasks can be performed programmatically. Here, an event that runs a simple shell script is created.
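As a rough idea of what the programmatic route could look like, the sketch below builds, without sending, an HTTP request that creates a similar event. Everything specific in it is an assumption to verify against the Cronicle API documentation: the `/api/app/create_event/v1` path, the `X-API-Key` header, the field names, and the `general`, `shellplug` and `maingrp` IDs. The API key is a placeholder, and `build_create_event_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_create_event_request(base_url, api_key, event):
    """Build (but do not send) a POST request that creates an event."""
    # Assumed endpoint path; check the Cronicle API docs.
    url = base_url.rstrip("/") + "/api/app/create_event/v1"
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        # Assumed authentication header; the key is created in the admin UI.
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )


# Assumed field names and IDs, for illustration only.
event = {
    "title": "Test Event",
    "enabled": 1,
    "category": "general",
    "plugin": "shellplug",
    "target": "maingrp",
    "params": {"script": "#!/bin/sh\necho hello\n"},
    "timing": {"minutes": [0]},  # assumed format: minute 0 of every hour
}

request = build_create_event_request("http://localhost:8080", "YOUR_API_KEY", event)
print(request.get_method(), request.full_url)
# To send it for real: urllib.request.urlopen(request)
```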

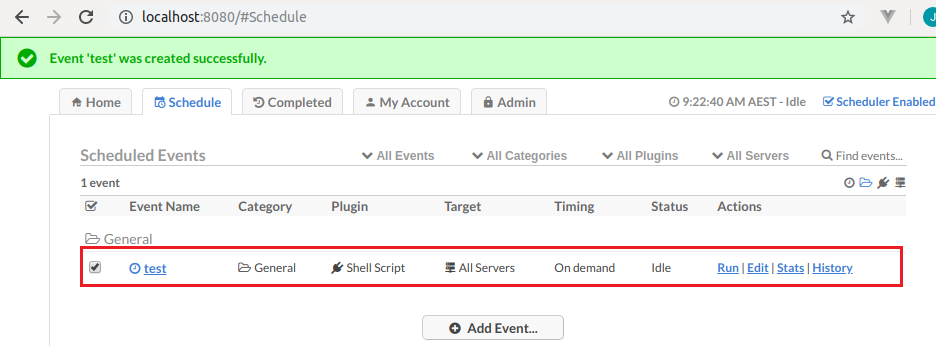

Once created, it is listed in the Schedule tab.

Backup Becomes Master

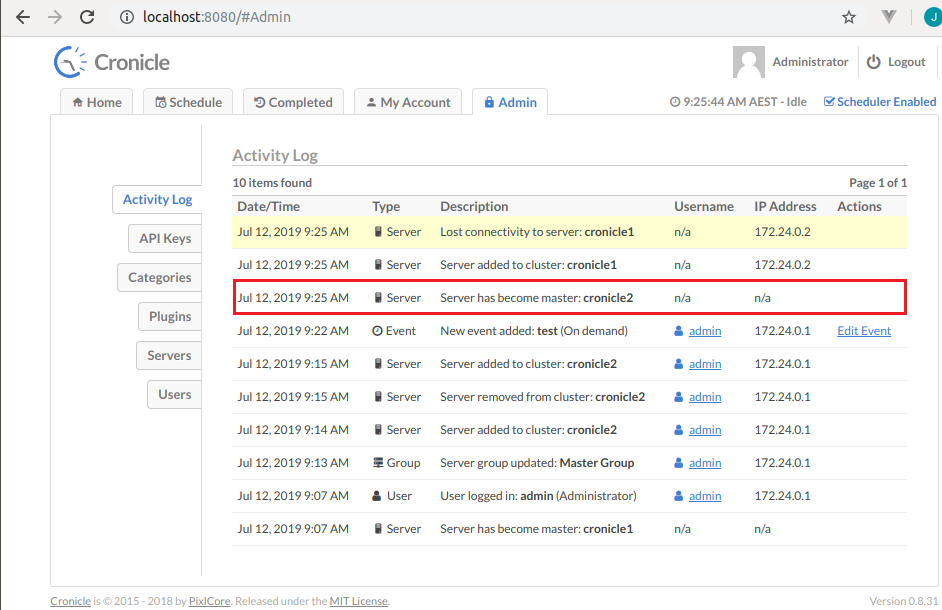

As mentioned earlier, the backup server takes over the role of master when the master becomes unavailable. To demonstrate this, I stopped and removed the master server as follows (docker-compose rm only removes stopped containers, so -s is added to stop it first).

    docker-compose rm -s -f cronicle1

After a while, it’s possible to see that the backup server becomes master.

It can also be checked in Admin > Activity Log.

In Schedule, the test event can be found.
