LocalStack provides an easy-to-use test/mocking framework for developing AWS applications. In this post, I’ll demonstrate how to use LocalStack for local development, with a simple web service as the example.

Specifically, the example is a simple web service built with Flask-RestPlus that supports CRUD operations against a database table. Creating and updating a record goes through SQS and Lambda: when a POST or PUT request is made, the service sends a message to an SQS queue and immediately returns a 204 response. Once the message is received, a Lambda function is invoked and performs the corresponding database operation.

The source of this post can be found here.

Web Service

GET requests return all records, or a single record when an ID is provided as a path parameter. A POST request, which takes no ID, creates a new record, and a PUT request with an ID updates an existing one. Note that both POST and PUT only send a message to the queue and immediately return a 204 response; the source can be found here.

    import json

    import flask
    from flask import jsonify
    from flask_restplus import Namespace, Resource

    # conn_db() and send_message() are helpers defined elsewhere in the project source.

    ns = Namespace("records")


    @ns.route("/")
    class Records(Resource):
        parser = ns.parser()
        parser.add_argument("message", type=str, required=True)

        def get(self):
            """
            Get all records
            """
            conn = conn_db()
            cur = conn.cursor(real_dict_cursor=True)
            cur.execute(
                """
                SELECT * FROM records ORDER BY created_on DESC
                """)

            records = cur.fetchall()
            return jsonify(records)

        @ns.expect(parser)
        def post(self):
            """
            Create a record via queue
            """
            try:
                # id is None for a new record; the Lambda function inserts it
                body = {
                    "id": None,
                    "message": self.parser.parse_args()["message"]
                }
                send_message(flask.current_app.config["QUEUE_NAME"], json.dumps(body))
                return "", 204
            except Exception:
                return "", 500


    @ns.route("/<string:id>")
    class Record(Resource):
        parser = ns.parser()
        parser.add_argument("message", type=str, required=True)

        def get(self, id):
            """
            Get a record given id
            """
            record = Record.get_record(id)
            if record is None:
                return {"message": "No record"}, 404
            return jsonify(record)

        @ns.expect(parser)
        def put(self, id):
            """
            Update a record via queue
            """
            record = Record.get_record(id)
            if record is None:
                return {"message": "No record"}, 404

            try:
                # an existing id tells the Lambda function to update the record
                message = {
                    "id": record["id"],
                    "message": self.parser.parse_args()["message"]
                }
                send_message(flask.current_app.config["QUEUE_NAME"], json.dumps(message))
                return "", 204
            except Exception:
                return "", 500

        @staticmethod
        def get_record(id):
            conn = conn_db()
            cur = conn.cursor(real_dict_cursor=True)
            cur.execute(
                """
                SELECT * FROM records WHERE id = %(id)s
                """, {"id": id})

            return cur.fetchone()
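The `send_message` helper called above is not shown in this post. A minimal sketch of what such a helper might look like with boto3 follows; it is an assumption rather than the project's actual code. The optional `client` parameter and the `StubSqsClient` class are hypothetical additions so the function can be tried without a running queue, and the endpoint and region are taken from the LocalStack setup described later.

```python
import json


def send_message(queue_name, body, client=None):
    """Send a message body to the named SQS queue and return the response."""
    if client is None:
        # Imported lazily so the function can be exercised with a stub client.
        import boto3

        # Assumption: LocalStack's SQS endpoint on port 4576, as used later in this post.
        client = boto3.client(
            "sqs",
            endpoint_url="http://localhost:4576",
            region_name="ap-southeast-2",
        )
    queue_url = client.get_queue_url(QueueName=queue_name)["QueueUrl"]
    return client.send_message(QueueUrl=queue_url, MessageBody=body)


class StubSqsClient:
    """Hypothetical in-memory stand-in for an SQS client, for local checks only."""

    def __init__(self):
        self.sent = []

    def get_queue_url(self, QueueName):
        return {"QueueUrl": "http://localhost:4576/queue/" + QueueName}

    def send_message(self, QueueUrl, MessageBody):
        self.sent.append((QueueUrl, MessageBody))
        return {"MessageId": str(len(self.sent))}


# Same payload shape as the POST handler: id is None for a new record.
stub = StubSqsClient()
send_message("test-queue", json.dumps({"id": None, "message": "hello"}), client=stub)
```

Passing the client in keeps the queue lookup and the send in one place while letting tests swap the real service for a stub.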

Lambda

The SQS queue that the web service sends messages to is an event source of the following Lambda function. Messages are polled from the queue and processed by the function as shown below.

    import os
    import sys
    import logging
    import json
    import psycopg2

    logger = logging.getLogger()
    logger.setLevel(logging.INFO)

    # connect once at import time so the connection is reused across invocations
    try:
        conn = psycopg2.connect(os.environ["DB_CONNECT"], connect_timeout=5)
    except psycopg2.Error as e:
        logger.error(e)
        sys.exit()

    logger.info("SUCCESS: Connection to DB")

    def lambda_handler(event, context):
        for r in event["Records"]:
            body = json.loads(r["body"])
            logger.info("Body: {0}".format(body))
            with conn.cursor() as cur:
                if body["id"] is None:
                    # no id: insert a new record
                    cur.execute(
                        """
                        INSERT INTO records (message) VALUES (%(message)s)
                        """, {k:v for k,v in body.items() if v is not None})
                else:
                    # existing id: update the record
                    cur.execute(
                        """
                        UPDATE records
                            SET message = %(message)s
                        WHERE id = %(id)s
                        """, body)
        conn.commit()

        logger.info("SUCCESS: Processing {0} records".format(len(event["Records"])))
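To make the insert/update branching easier to follow without a database, the sketch below pulls the decision out into a pure function and runs it over a trimmed SQS event. `build_statement` is a hypothetical helper that is not part of the original function, and the event contains only the `body` field that the handler reads.

```python
import json


def build_statement(body):
    """Return the (sql, params) pair the handler would run for one message body."""
    if body["id"] is None:
        return (
            "INSERT INTO records (message) VALUES (%(message)s)",
            {"message": body["message"]},
        )
    return (
        "UPDATE records SET message = %(message)s WHERE id = %(id)s",
        {"id": body["id"], "message": body["message"]},
    )


# A trimmed SQS event: real events carry more fields per record.
event = {
    "Records": [
        {"body": json.dumps({"id": None, "message": "test"})},
        {"body": json.dumps({"id": 4, "message": "test put"})},
    ]
}

statements = [build_statement(json.loads(r["body"])) for r in event["Records"]]
for sql, params in statements:
    print(sql, params)
```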

Database

As RDS is not yet supported by LocalStack, a PostgreSQL database is created with Docker. The web service performs CRUD operations against a table named records. The initialization SQL script is shown below.

    CREATE DATABASE testdb;
    \connect testdb;

    CREATE SCHEMA testschema;
    GRANT ALL ON SCHEMA testschema TO testuser;

    -- change search_path on a connection-level
    SET search_path TO testschema;

    -- change search_path on a database-level
    ALTER database "testdb" SET search_path TO testschema;

    CREATE TABLE testschema.records (
        id serial NOT NULL,
        message varchar(30) NOT NULL,
        created_on timestamptz NOT NULL DEFAULT now(),
        CONSTRAINT records_pkey PRIMARY KEY (id)
    );

    INSERT INTO testschema.records (message)
    VALUES ('foo'), ('bar'), ('baz');

Launch Services

The docker-compose file below creates the local AWS services and the PostgreSQL database.

    version: '3.7'
    services:
      localstack:
        image: localstack/localstack
        ports:
          - '4563-4584:4563-4584'
          - '8080:8080'
        privileged: true
        environment:
          - SERVICES=s3,sqs,lambda
          - DEBUG=1
          - DATA_DIR=/tmp/localstack/data
          - DEFAULT_REGION=ap-southeast-2
          - LAMBDA_EXECUTOR=docker-reuse
          - LAMBDA_REMOTE_DOCKER=false
          - LAMBDA_DOCKER_NETWORK=play-localstack_default
          - AWS_ACCESS_KEY_ID=foobar
          - AWS_SECRET_ACCESS_KEY=foobar
          - AWS_DEFAULT_REGION=ap-southeast-2
          - DB_CONNECT='postgresql://testuser:testpass@postgres:5432/testdb'
          - TEST_QUEUE=test-queue
          - TEST_LAMBDA=test-lambda
        volumes:
          - ./init/create-resources.sh:/docker-entrypoint-initaws.d/create-resources.sh
          - ./init/lambda_package:/tmp/lambda_package
          # - './.localstack:/tmp/localstack'
          - '/var/run/docker.sock:/var/run/docker.sock'
      postgres:
        image: postgres
        ports:
          - 5432:5432
        volumes:
          - ./init/db:/docker-entrypoint-initdb.d
        depends_on:
          - localstack
        environment:
          - POSTGRES_USER=testuser
          - POSTGRES_PASSWORD=testpass

The LocalStack configuration is easiest to explain through its environment variables.

- SERVICES - S3, SQS and Lambda services are selected

- DEFAULT_REGION - Local AWS resources will be created in ap-southeast-2 by default

- LAMBDA_EXECUTOR - By selecting docker-reuse, the Lambda function is invoked in a separate container (based on the lambci/lambda image). Once a container is created, it is reused. Note that, in order to invoke a Lambda function in a separate Docker container, the LocalStack container should run in privileged mode (privileged: true)

- LAMBDA_REMOTE_DOCKER - It is set to false so that a Lambda function package can be added from a local path instead of a zip file.

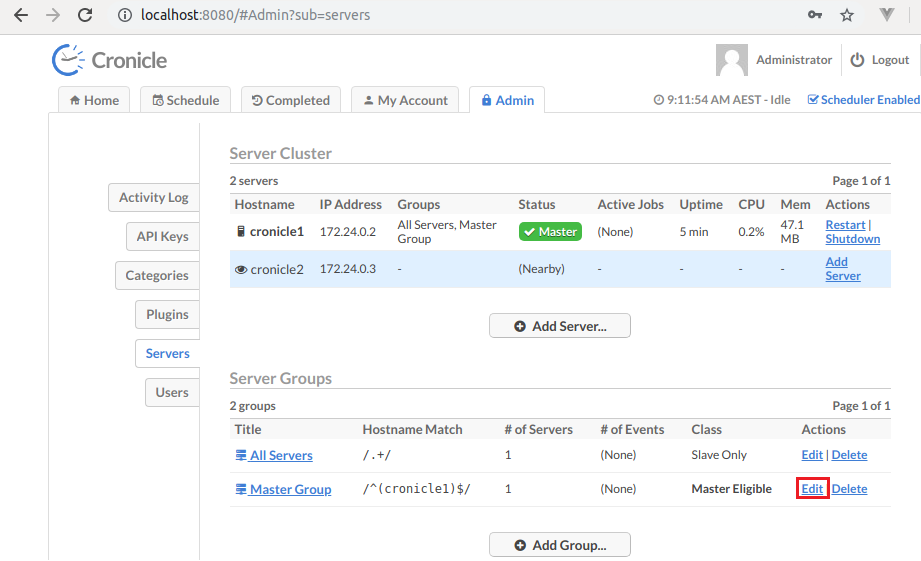

- LAMBDA_DOCKER_NETWORK - Although the Lambda function is invoked in a separate container, it should be able to discover the database service (postgres). By default, Docker Compose creates a network (<parent-folder>_default) and, by specifying that network name, the Lambda function can connect to the database using the host name set in DB_CONNECT
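The value of LAMBDA_DOCKER_NETWORK depends on the folder that holds the docker-compose file. The sketch below shows how the name can be derived, assuming the project name is not overridden (for example with `-p` or `COMPOSE_PROJECT_NAME`). The normalization rule is a simplification that may differ between Compose versions, and `default_network_name` is a hypothetical helper.

```python
import os
import re


def default_network_name(project_dir):
    """Guess the default Compose network name for a project folder."""
    # Assumption: the project name is the lowercased folder name with
    # characters other than letters, digits, '-' and '_' removed.
    name = os.path.basename(os.path.normpath(project_dir)).lower()
    name = re.sub(r"[^a-z0-9_-]", "", name)
    return name + "_default"


print(default_network_name("/home/user/play-localstack"))
```

Running `docker network ls` after the services start is the reliable way to confirm the actual name.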

The actual AWS resources are created by create-resources.sh, which is executed at startup. An SQS queue and a Lambda function are created, and the queue is mapped as an event source of the Lambda function.

    #!/bin/bash

    echo "Creating $TEST_QUEUE and $TEST_LAMBDA"

    # create the queue that the web service sends messages to
    aws --endpoint-url=http://localhost:4576 sqs create-queue \
      --queue-name "$TEST_QUEUE"

    # create the function from the locally mounted package
    aws --endpoint-url=http://localhost:4574 lambda create-function \
      --function-name "$TEST_LAMBDA" \
      --code S3Bucket="__local__",S3Key="/tmp/lambda_package" \
      --runtime python3.6 \
      --environment Variables="{DB_CONNECT=$DB_CONNECT}" \
      --role "arn:aws:lambda:ap-southeast-2:000000000000:function:$TEST_LAMBDA" \
      --handler lambda_function.lambda_handler

    # map the queue as an event source of the function
    aws --endpoint-url=http://localhost:4574 lambda create-event-source-mapping \
      --function-name "$TEST_LAMBDA" \
      --event-source-arn "arn:aws:sqs:elasticmq:000000000000:$TEST_QUEUE"
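For readers who prefer Python over the AWS CLI, the same three steps can be sketched with boto3-style clients. This is an illustrative equivalent, not part of the original project. `create_resources` and `RecordingClient` are hypothetical names, the ARNs and the `__local__` bucket are copied from the script above, and in real use the clients would be created with `boto3.client("sqs", endpoint_url="http://localhost:4576")` and `boto3.client("lambda", endpoint_url="http://localhost:4574")`.

```python
def create_resources(sqs, lambda_client, queue_name, function_name, db_connect):
    """Create the queue and the function, then map the queue to the function."""
    sqs.create_queue(QueueName=queue_name)
    lambda_client.create_function(
        FunctionName=function_name,
        Runtime="python3.6",
        Role="arn:aws:lambda:ap-southeast-2:000000000000:function:" + function_name,
        Handler="lambda_function.lambda_handler",
        # "__local__" tells LocalStack to load the code from a local path.
        Code={"S3Bucket": "__local__", "S3Key": "/tmp/lambda_package"},
        Environment={"Variables": {"DB_CONNECT": db_connect}},
    )
    lambda_client.create_event_source_mapping(
        FunctionName=function_name,
        EventSourceArn="arn:aws:sqs:elasticmq:000000000000:" + queue_name,
    )


class RecordingClient:
    """Hypothetical stand-in that records calls instead of contacting a service."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        # Only reached for attributes that are not set, i.e. the API methods.
        def record(**kwargs):
            self.calls.append((name, kwargs))
            return {}

        return record


sqs_stub, lambda_stub = RecordingClient(), RecordingClient()
create_resources(
    sqs_stub,
    lambda_stub,
    "test-queue",
    "test-lambda",
    "postgresql://testuser:testpass@postgres:5432/testdb",
)
```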

The services can be launched as follows.

    docker-compose up -d

Test Web Service

Before testing the web service, the SQS and Lambda integration can be checked by sending a message directly to the queue as follows.

    aws --endpoint-url http://localhost:4576 sqs send-message \
      --queue-url http://localhost:4576/queue/test-queue \
      --message-body '{"id": null, "message": "test"}'

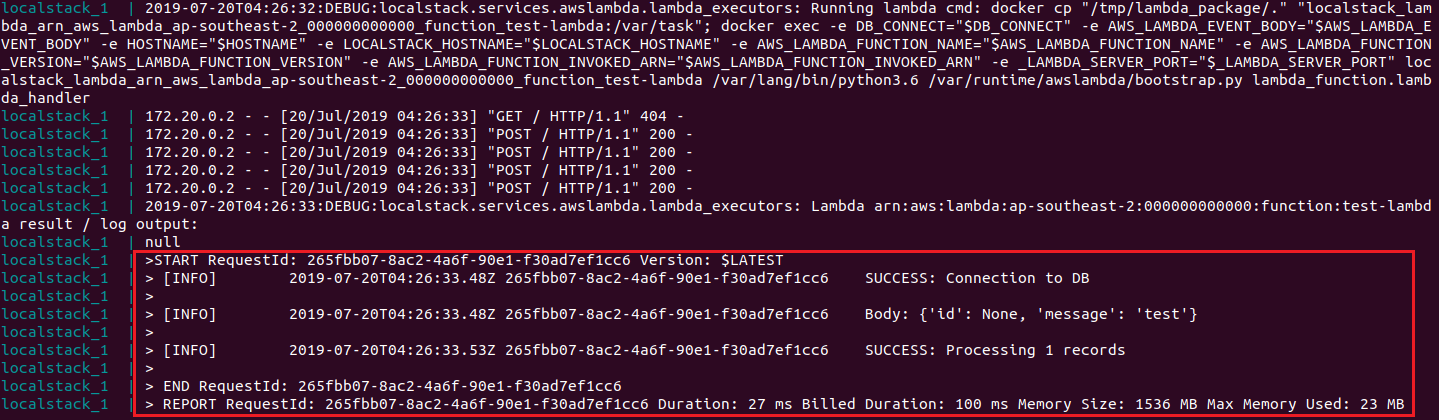

As shown in the image above, LocalStack invokes the Lambda function in a separate Docker container.

The web service can be started as follows.

    FLASK_APP=api FLASK_ENV=development flask run

Using HTTPie, the record created just before can be checked as follows.

    http http://localhost:5000/api/records/4

    {
        "created_on": "2019-07-20T04:26:33.048841+00:00",
        "id": 4,
        "message": "test"
    }

To update it, send a PUT request and then fetch the record again.

    echo '{"message": "test put"}' | \
      http PUT http://localhost:5000/api/records/4

    http http://localhost:5000/api/records/4

    {
        "created_on": "2019-07-20T04:26:33.048841+00:00",
        "id": 4,
        "message": "test put"
    }
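Because PUT returns 204 before the Lambda function has written to the database, a GET issued immediately afterwards may still return the old message. A small polling helper can make scripted checks more robust. The sketch below is one possible approach, not something from the original project: `wait_for` and `fetch_message` are hypothetical names, and the URL assumes the Flask development server started above.

```python
import json
import time
import urllib.request


def wait_for(fetch, expected, timeout=5.0, interval=0.2):
    """Call fetch() until it returns expected or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        if fetch() == expected:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def fetch_message(record_id, base_url="http://localhost:5000/api/records"):
    """Return the message of a record via the web service (needs the service running)."""
    with urllib.request.urlopen("{0}/{1}".format(base_url, record_id)) as resp:
        return json.loads(resp.read().decode("utf-8"))["message"]


# Offline demonstration: the canned responses stand in for repeated GET requests.
responses = iter(["test", "test", "test put"])
updated = wait_for(lambda: next(responses), "test put", timeout=2.0, interval=0.01)
```

Against the running service, the call would be `wait_for(lambda: fetch_message(4), "test put")`.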
