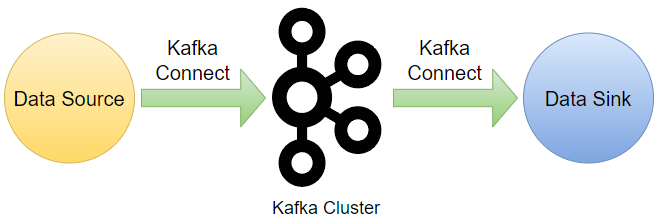

Amazon Kinesis Data Streams and Amazon Managed Streaming for Apache Kafka (MSK) are two managed streaming services offered by AWS. Many resources on the web suggest that Kinesis Data Streams integrates better with other AWS services. With the help of Kafka Connect, however, that is not necessarily the case. According to the Apache Kafka documentation, Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. It makes it simple to quickly define connectors that move large collections of data into and out of Kafka. Kafka Connect supports two types of connectors: source and sink. Source connectors ingest messages from external systems into Kafka topics, while sink connectors deliver messages from Kafka topics to external systems. In this post, I will introduce the Kafka connectors that are available mainly for integrating with AWS services. Developing and deploying some of them will be covered in later posts.

- Part 1 Introduction (this post)

- Part 2 Develop Camel DynamoDB Sink Connector

- Part 3 Deploy Camel DynamoDB Sink Connector

- Part 4 Develop Aiven OpenSearch Sink Connector

- Part 5 Deploy Aiven OpenSearch Sink Connector
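To make the source/sink distinction concrete, here is a minimal sketch of how a connector is typically described to Kafka Connect: a name plus a flat map of string settings, which is the JSON body accepted by the Connect REST API on `POST /connectors`. It uses the file connectors that ship with Apache Kafka purely for illustration. The connector names, file paths and topic name are made up, and the helper `connector_payload` is hypothetical rather than part of any library.

```python
import json


def connector_payload(name: str, connector_class: str, settings: dict) -> dict:
    """Build the JSON body for POST /connectors on the Kafka Connect REST API."""
    config = {"connector.class": connector_class, "tasks.max": "1"}
    config.update(settings)
    return {"name": name, "config": config}


# Source connector: reads lines from a file and produces them to a single topic.
# Illustrative values only; the path and topic are assumptions.
file_source = connector_payload(
    "demo-file-source",
    "org.apache.kafka.connect.file.FileStreamSourceConnector",
    {"file": "/tmp/input.txt", "topic": "demo-topic"},
)

# Sink connector: consumes from one or more topics ("topics", plural)
# and writes the records to an external system, here a local file.
file_sink = connector_payload(
    "demo-file-sink",
    "org.apache.kafka.connect.file.FileStreamSinkConnector",
    {"file": "/tmp/output.txt", "topics": "demo-topic"},
)

if __name__ == "__main__":
    print(json.dumps(file_source, indent=2))
    print(json.dumps(file_sink, indent=2))
```

The connectors discussed in the rest of this post follow the same shape: only the `connector.class` and the connector-specific settings change.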

Amazon

As far as I can find, there are two GitHub repositories by AWS. The Kinesis Kafka Connector includes sink connectors for Kinesis Data Streams and Kinesis Data Firehose. AWS also recently released a Kafka connector for Amazon Personalize, which is kept in its own project repository. The available connectors are summarised below.

| Service | Source | Sink |
|---|---|---|
| Kinesis | | ✔ |
| Kinesis - Firehose | | ✔ |
| Personalize | | ✔ |
| EventBridge | | ✔ |

Note that the sink connector for Kinesis Data Firehose lets us build data pipelines to the AWS services that Firehose supports as destinations, mainly S3, Redshift and OpenSearch.
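As a rough sketch of what a Firehose sink configuration might look like, the snippet below renders a properties file from a Python dictionary. The connector class and the property names (`region`, `deliveryStream`, `batch`, `batchSize`, `batchSizeInBytes`) follow my recollection of the sample configuration in the Kinesis Kafka Connector repository and should be treated as assumptions to verify against its README. The connector name, topic, region and delivery stream name are placeholders, and `to_properties` is a hypothetical helper.

```python
def to_properties(config: dict) -> str:
    """Render a flat config map as Java-style key=value properties text."""
    return "\n".join(f"{key}={value}" for key, value in config.items())


# Assumed settings for the Firehose sink connector; check the project README.
firehose_sink = {
    "name": "demo-firehose-sink",  # placeholder connector name
    "connector.class": "com.amazon.kinesis.kafka.FirehoseSinkConnector",
    "tasks.max": "1",
    "topics": "demo-topic",  # placeholder Kafka topic to read from
    "region": "ap-southeast-2",  # placeholder AWS region
    "deliveryStream": "demo-delivery-stream",  # placeholder delivery stream
    "batch": "true",
    "batchSize": "500",
    "batchSizeInBytes": "3670016",
}

if __name__ == "__main__":
    print(to_properties(firehose_sink))
```

The delivery stream itself decides where the records end up (S3, Redshift or OpenSearch), so the connector configuration stays the same regardless of the final destination.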

There is one more source connector by AWS Labs worth noting, although it doesn’t integrate with a specific AWS service. The Amazon MSK Data Generator is a translation of the Voluble connector, and it can be used to generate test or fake data and ingest it into a Kafka topic.

Confluent Hub

Confluent is a leading provider of Apache Kafka and related services. It manages the Confluent Hub, where we can discover (and/or submit) Kafka connectors for various integration use cases. Although it hosts a wide range of connectors, fewer than 10 of them can be used to integrate with AWS services reliably at the time of writing this post. Moreover, all of them except for the S3 sink connector are licensed under Commercial (Standard), which requires a Confluent Platform subscription. Without one, a connector can only be used on a single-broker cluster or evaluated for 30 days. In practice, therefore, most of them cannot be used unless you have a Confluent Platform subscription.

| Service | Source | Sink | Licence |
|---|---|---|---|
| S3 | | ✔ | Free |
| S3 | ✔ | | Commercial (Standard) |
| Redshift | | ✔ | Commercial (Standard) |
| SQS | ✔ | | Commercial (Standard) |
| Kinesis | ✔ | | Commercial (Standard) |
| DynamoDB | | ✔ | Commercial (Standard) |
| CloudWatch Metrics | | ✔ | Commercial (Standard) |
| Lambda | | ✔ | Commercial (Standard) |
| CloudWatch Logs | ✔ | | Commercial (Standard) |

Camel Kafka Connector

Apache Camel is a versatile open-source integration framework based on known Enterprise Integration Patterns. It provides Camel Kafka connectors, which allow you to use all Camel components as Kafka Connect connectors. The latest LTS version is 3.18.x, and it is supported until July 2023. Note that it is built with Apache Kafka 2.8.0 as a dependency. In spite of this compatibility requirement, the connectors use the Kafka client, which is often compatible with other broker versions, especially when the two versions are close. Therefore, we can use them with a different Kafka version, e.g. 2.8.1, which is recommended by Amazon MSK.

At the time of writing this post, there are 23 source and sink connectors that target specific AWS services - see the summary table below. Moreover, there are connectors targeting popular RDBMS, which cover MariaDB, MySQL, Oracle, PostgreSQL and MS SQL Server. Together with the Debezium connectors, they let us build effective data pipelines on Amazon RDS as well. Overall, Camel connectors can be quite beneficial when building real-time data pipelines on AWS.

Other Providers

Two other vendors (Aiven and Lenses) provide Kafka connectors that target AWS services, namely S3 and OpenSearch - see below. I think the OpenSearch sink connector from Aiven is worth a close look.

| Service | Source | Sink | Provider |
|---|---|---|---|
| S3 | | ✔ | Aiven |
| OpenSearch | | ✔ | Aiven |
| S3 | ✔ | | Lenses |
| S3 | | ✔ | Lenses |

Summary

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. It can be used to build real-time data pipelines on AWS effectively. We have discussed a range of Kafka connectors from both Amazon and third-party projects. We will showcase some of them in later posts.
