As described in the Confluent documentation, Schema Registry provides a centralized repository for managing and validating schemas for topic message data, and for serialization and deserialization of that data over the network. Producers and consumers of Kafka topics can use schemas to ensure data consistency and compatibility as schemas evolve. On AWS, the Glue Schema Registry provides features to manage and enforce schemas in data streaming applications, with convenient integrations with Apache Kafka, Amazon Managed Streaming for Apache Kafka, Amazon Kinesis Data Streams, Amazon Kinesis Data Analytics for Apache Flink, and AWS Lambda.

In order to integrate the Glue Schema Registry with an application, we need to use the AWS Glue Schema Registry Client library, which primarily provides serializers and deserializers for the Avro, JSON and Protobuf formats. It also supports other necessary features such as registering schemas and performing compatibility checks. As the project doesn't provide pre-built binaries, we have to build them on our own. In this post, I'll first introduce how the Glue Schema Registry integrates with Kafka producer and consumer apps and then illustrate how to build the client library. A successful build produces binaries not only for Kafka Connect but also for other applications such as Flink on Kinesis Data Analytics, so this post serves as a stepping stone for later posts.

- Part 1 Cluster Setup

- Part 2 Management App

- Part 3 Kafka Connect

- Part 4 Producer and Consumer

- Part 5 Glue Schema Registry (this post)

- Part 6 Kafka Connect with Glue Schema Registry

- Part 7 Producer and Consumer with Glue Schema Registry

- Part 8 SSL Encryption

- Part 9 SSL Authentication

- Part 10 SASL Authentication

- Part 11 Kafka Authorization

How It Works with Apache Kafka

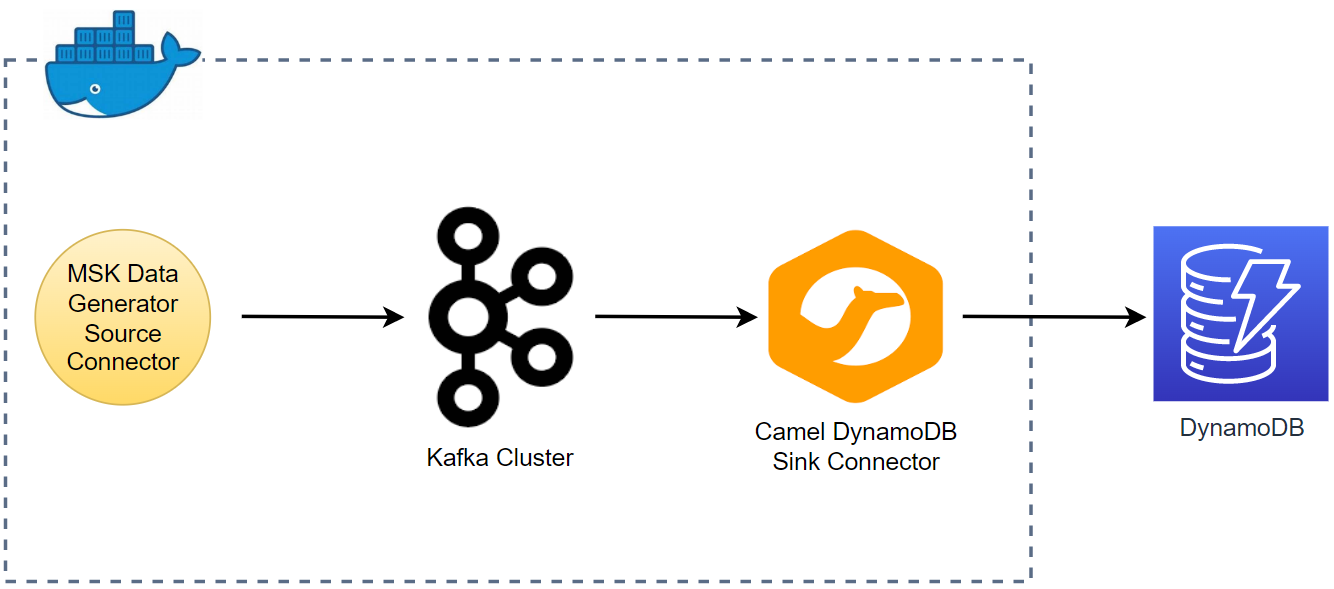

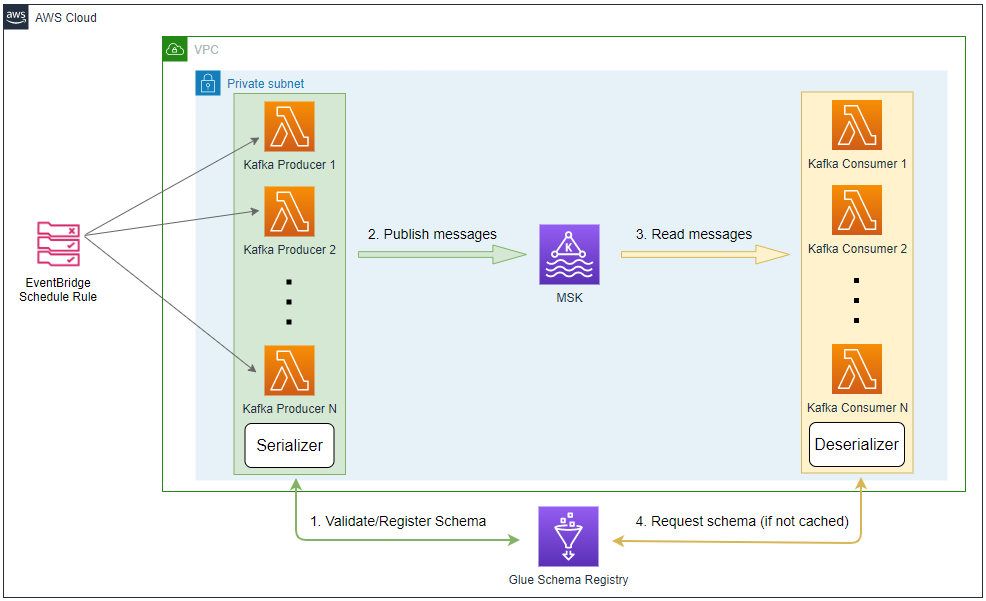

The diagram shows how Kafka producer and consumer apps are integrated with the Glue Schema Registry. Because producer and consumer apps are decoupled, they operate on Kafka topics rather than communicating with each other directly. It is therefore important to have a schema registry that manages and stores schemas and validates them, so that both sides agree on the format of the data.

- The producer checks whether the schema used for serializing records is valid, and registers a new schema version if it has not been registered yet.

- Note that the schema registry performs a compatibility check when registering a new schema version. If the new version is incompatible, registration fails and the producer cannot send messages.

- The producer serializes and compresses messages and sends them to the Kafka cluster.

- The consumer reads the serialized and compressed messages.

- The consumer retrieves the schema from the schema registry (if it is not cached yet) and uses it to decompress and deserialize messages.
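To make the flow above concrete, here is a minimal sketch that writes out client properties wiring the library into a Kafka producer and consumer. It assumes the property keys defined in the library's `AWSSchemaRegistryConstants` class as I understand them (`region`, `registry.name`, `schemaName`, `dataFormat`, `schemaAutoRegistrationEnabled`, `compression`, `avroRecordType`), so check them against the version you build. The region, registry name (`example-registry`) and schema name (`orders-value`) are hypothetical placeholders, and connection settings such as `bootstrap.servers` are left out.

```bash
#!/usr/bin/env bash
# Sketch only: key names assumed from AWSSchemaRegistryConstants; values are placeholders.
CONF_DIR="${CONF_DIR:-./glue-client-config}"
AWS_REGION="${AWS_REGION:-ap-southeast-2}"   # placeholder region
mkdir -p "$CONF_DIR"

# Producer: the serializer validates the schema, registers a new version when
# auto-registration is enabled, and compresses the serialized records.
cat > "$CONF_DIR/producer.properties" <<EOF
key.serializer=org.apache.kafka.common.serialization.StringSerializer
value.serializer=com.amazonaws.services.schemaregistry.serializers.GlueSchemaRegistryKafkaSerializer
region=${AWS_REGION}
registry.name=example-registry
schemaName=orders-value
dataFormat=AVRO
schemaAutoRegistrationEnabled=true
compression=ZLIB
EOF

# Consumer: the deserializer fetches the schema for the record's schema version id
# (caching it locally) and uses it to decompress and deserialize records.
cat > "$CONF_DIR/consumer.properties" <<EOF
key.deserializer=org.apache.kafka.common.serialization.StringDeserializer
value.deserializer=com.amazonaws.services.schemaregistry.deserializers.GlueSchemaRegistryKafkaDeserializer
region=${AWS_REGION}
avroRecordType=GENERIC_RECORD
EOF

echo "wrote $CONF_DIR/producer.properties and $CONF_DIR/consumer.properties"
```

Turning auto-registration on is what makes the producer register a new schema version on first use; with it off, the schema has to exist in the registry beforehand.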

Glue Schema Registry Client Library

As mentioned earlier, the Glue Schema Registry Client library primarily provides serializers and deserializers for the Avro, JSON and Protobuf formats. It also supports other necessary features such as registering schemas and performing compatibility checks. The main features of the library are listed below.

- Messages/records are serialized on the producer side and deserialized on the consumer side using schema-registry-serde.

- Support for three data formats: AVRO, JSON (with JSON Schema Draft04, Draft06, Draft07), and Protocol Buffers (Protobuf syntax versions 2 and 3).

- Kafka Streams support for AWS Glue Schema Registry.

- Records can be compressed to reduce message size.

- A built-in local in-memory cache that reduces calls to AWS Glue Schema Registry. The schema version ID for a schema definition is cached on the producer side, and the schema for a schema version ID is cached on the consumer side.

- Auto-registration can be enabled so that any new schema is registered automatically.

- Schema evolution (compatibility) checks are performed while registering schemas.

- Migration from a third-party schema registry.

- Flink support for AWS Glue Schema Registry.

- Kafka Connect support for AWS Glue Schema Registry.

The library works with Apache Kafka as well as other AWS services. See the AWS documentation for the integration use cases listed below.

- Connecting Schema Registry to Amazon MSK or Apache Kafka

- Integrating Amazon Kinesis Data Streams with the AWS Glue Schema Registry

- Amazon Kinesis Data Analytics for Apache Flink

- Integration with AWS Lambda

- AWS Glue Data Catalog

- AWS Glue streaming

- Apache Kafka Streams

- Apache Kafka Connect
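For the Kafka Connect integration, the Avro converter built later in this post is configured through `value.converter.`-prefixed properties. The sketch below writes such a fragment; it assumes the converter class `com.amazonaws.services.schemaregistry.kafkaconnect.AWSKafkaAvroConverter` and the prefixed keys as I recall them from the AWS documentation, so verify them before use. Plain string keys, the region and the registry name (`example-registry`) are hypothetical choices for illustration.

```bash
#!/usr/bin/env bash
# Sketch only: converter class and keys assumed from the AWS docs; values are placeholders.
CONF_DIR="${CONF_DIR:-./glue-client-config}"
AWS_REGION="${AWS_REGION:-ap-southeast-2}"   # placeholder region
mkdir -p "$CONF_DIR"

# Keys stay plain strings; values go through the Glue Avro converter.
cat > "$CONF_DIR/connect-converter.properties" <<EOF
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=com.amazonaws.services.schemaregistry.kafkaconnect.AWSKafkaAvroConverter
value.converter.region=${AWS_REGION}
value.converter.registry.name=example-registry
value.converter.schemaAutoRegistrationEnabled=true
value.converter.avroRecordType=GENERIC_RECORD
EOF

echo "wrote $CONF_DIR/connect-converter.properties"
```

These lines would be merged into a worker or connector configuration; the converter jar itself must also be visible to the Connect worker.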

Build Glue Schema Registry Client Library

In order to build the client library, we need to have both the JDK and Maven installed. I use Ubuntu 18.04 on WSL2, and both are installed from the Ubuntu package manager.

```bash
$ sudo apt update && sudo apt install -y openjdk-11-jdk maven
...
$ mvn --version
Apache Maven 3.6.3
Maven home: /usr/share/maven
Java version: 11.0.19, vendor: Ubuntu, runtime: /usr/lib/jvm/java-11-openjdk-amd64
Default locale: en, platform encoding: UTF-8
OS name: "linux", version: "5.4.72-microsoft-standard-wsl2", arch: "amd64", family: "unix"
```
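Before running the build, it can help to confirm that every tool the build script calls is on the `PATH`. The helper below, `require_cmds`, is hypothetical (not part of the post's repository). Besides the JDK and Maven, it also checks `curl` and `unzip`, which the script uses to fetch and extract the source archive and which a fresh WSL2 image may not have.

```bash
#!/usr/bin/env bash
# Hypothetical preflight helper: report missing commands, return non-zero if any are missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# The build script needs a JDK and Maven, plus curl and unzip for the source archive.
if require_cmds java mvn curl unzip; then
  java -version 2>&1 | head -n 1
  mvn --version | head -n 1
else
  echo "install the missing tools first, e.g. sudo apt install -y openjdk-11-jdk maven curl unzip" >&2
fi
```

The check only reports problems instead of exiting, so it can be pasted into a shell session as well as run as a script.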

We first need to download the source archive from the project repository. At the time of writing, the latest version is v.1.1.15, and it can be downloaded using curl with the -L flag so that curl follows the redirect to the actual download URL. Once downloaded, we can build the binaries as described in the project repository. The script below downloads and builds the client library. It can also be found in the GitHub repository of this post.

```bash
#!/usr/bin/env bash
# kafka-dev-with-docker/part-05/build.sh
SCRIPT_DIR="$(cd "$(dirname "$0")"; pwd)"

SRC_PATH="${SCRIPT_DIR}/plugins"
rm -rf "${SRC_PATH}" && mkdir "${SRC_PATH}"

## Download and build glue schema registry
echo "downloading glue schema registry..."
VERSION=v.1.1.15
DOWNLOAD_URL=https://github.com/awslabs/aws-glue-schema-registry/archive/refs/tags/$VERSION.zip
SOURCE_NAME=aws-glue-schema-registry-$VERSION

# -L follows the redirect to the actual archive location
curl -L -o "${SRC_PATH}/${SOURCE_NAME}.zip" "${DOWNLOAD_URL}" \
  && unzip -qq "${SRC_PATH}/${SOURCE_NAME}.zip" -d "${SRC_PATH}" \
  && rm "${SRC_PATH}/${SOURCE_NAME}.zip"

echo "building glue schema registry..."
# Use the absolute source path so the script also works when run from another directory
cd "${SRC_PATH}/${SOURCE_NAME}/build-tools" \
  && mvn clean install -DskipTests -Dcheckstyle.skip \
  && cd .. \
  && mvn clean install -DskipTests \
  && mvn dependency:copy-dependencies
```

I skipped tests with the -DskipTests option to save build time. I also skipped the checkstyle execution with the -Dcheckstyle.skip option when building the build tools module because I encountered the following error.

```text
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-checkstyle-plugin:3.1.2:check (default) on project schema-registry-build-tools: Failed during checkstyle execution: Unable to find suppressions file at location: /tmp/kafka-pocs/kafka-dev-with-docker/part-05/plugins/aws-glue-schema-registry-v.1.1.15/build-tools/build-tools/src/main/resources/suppressions.xml: Could not find resource '/tmp/kafka-pocs/kafka-dev-with-docker/part-05/plugins/aws-glue-schema-registry-v.1.1.15/build-tools/build-tools/src/main/resources/suppressions.xml'. -> [Help 1]
```

Once the build succeeded, I saw the following messages.

```text
[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary for AWS Glue Schema Registry Library 1.1.15:
[INFO]
[INFO] AWS Glue Schema Registry Library ................... SUCCESS [ 0.644 s]
[INFO] AWS Glue Schema Registry Build Tools ............... SUCCESS [ 0.038 s]
[INFO] AWS Glue Schema Registry common .................... SUCCESS [ 0.432 s]
[INFO] AWS Glue Schema Registry Serializer Deserializer ... SUCCESS [ 0.689 s]
[INFO] AWS Glue Schema Registry Serializer Deserializer with MSK IAM Authentication client SUCCESS [ 0.216 s]
[INFO] AWS Glue Schema Registry Kafka Streams SerDe ....... SUCCESS [ 0.173 s]
[INFO] AWS Glue Schema Registry Kafka Connect AVRO Converter SUCCESS [ 0.190 s]
[INFO] AWS Glue Schema Registry Flink Avro Serialization Deserialization Schema SUCCESS [ 0.541 s]
[INFO] AWS Glue Schema Registry examples .................. SUCCESS [ 0.211 s]
[INFO] AWS Glue Schema Registry Integration Tests ......... SUCCESS [ 0.648 s]
[INFO] AWS Glue Schema Registry Kafka Connect JSONSchema Converter SUCCESS [ 0.239 s]
[INFO] AWS Glue Schema Registry Kafka Connect Converter for Protobuf SUCCESS [ 0.296 s]
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 5.287 s
[INFO] Finished at: 2023-05-19T08:08:51+10:00
[INFO] ------------------------------------------------------------------------
```
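Rather than scanning the reactor summary by eye, a small check can confirm that the jars used later in this series exist. This is a sketch: `verify_jars` is a hypothetical helper, and the module directories and jar names are the ones this build produced for version 1.1.15, so adjust `LIB_VERSION` and `SRC_DIR` for another release. Run it from the directory that contains `build.sh`.

```bash
#!/usr/bin/env bash
# Hypothetical post-build check for the 1.1.15 jars; returns non-zero if any are missing.
SRC_DIR="${SRC_DIR:-plugins/aws-glue-schema-registry-v.1.1.15}"
LIB_VERSION="1.1.15"

verify_jars() {
  local base="$1" status=0 jar
  for jar in \
    "avro-kafkaconnect-converter/target/schema-registry-kafkaconnect-converter-${LIB_VERSION}.jar" \
    "jsonschema-kafkaconnect-converter/target/jsonschema-kafkaconnect-converter-${LIB_VERSION}.jar" \
    "protobuf-kafkaconnect-converter/target/protobuf-kafkaconnect-converter-${LIB_VERSION}.jar" \
    "avro-flink-serde/target/schema-registry-flink-serde-${LIB_VERSION}.jar" \
    "kafkastreams-serde/target/schema-registry-kafkastreams-serde-${LIB_VERSION}.jar" \
    "serializer-deserializer/target/schema-registry-serde-${LIB_VERSION}.jar" \
    "serializer-deserializer-msk-iam/target/schema-registry-serde-msk-iam-${LIB_VERSION}.jar"
  do
    if [ -f "${base}/${jar}" ]; then
      echo "ok      ${jar}"
    else
      echo "missing ${jar}" >&2
      status=1
    fi
  done
  return "$status"
}

verify_jars "$SRC_DIR" || echo "some jars are missing; re-run build.sh and check the Maven output" >&2
```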

As the build messages show, we obtain binaries not only for Kafka Connect but also for other applications such as Flink on Kinesis Data Analytics. The available binaries are listed below.

```text
## kafka connect
plugins/aws-glue-schema-registry-v.1.1.15/avro-kafkaconnect-converter/target/
├...
├── schema-registry-kafkaconnect-converter-1.1.15.jar
plugins/aws-glue-schema-registry-v.1.1.15/jsonschema-kafkaconnect-converter/target/
├...
├── jsonschema-kafkaconnect-converter-1.1.15.jar
plugins/aws-glue-schema-registry-v.1.1.15/protobuf-kafkaconnect-converter/target/
├...
├── protobuf-kafkaconnect-converter-1.1.15.jar
## flink
plugins/aws-glue-schema-registry-v.1.1.15/avro-flink-serde/target/
├...
├── schema-registry-flink-serde-1.1.15.jar
## kafka streams
plugins/aws-glue-schema-registry-v.1.1.15/kafkastreams-serde/target/
├...
├── schema-registry-kafkastreams-serde-1.1.15.jar
## serializer/deserializer
plugins/aws-glue-schema-registry-v.1.1.15/serializer-deserializer/target/
├...
├── schema-registry-serde-1.1.15.jar
plugins/aws-glue-schema-registry-v.1.1.15/serializer-deserializer-msk-iam/target/
├...
├── schema-registry-serde-msk-iam-1.1.15.jar
```
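Kafka Connect loads a converter from a directory under one of the worker's `plugin.path` entries, and the converter needs its runtime dependencies alongside it. The sketch below stages the Avro converter that way. It assumes `mvn dependency:copy-dependencies` left each module's dependency jars in its `target/dependency` directory (Maven's default output location for that goal); `stage_converter` and the `connect-plugins/glue-avro-converter` destination are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: stage the Glue Avro converter and its dependencies as one Kafka Connect plugin.
# stage_converter and CONNECT_PLUGIN_DIR are hypothetical names used for illustration.
SRC_DIR="${SRC_DIR:-plugins/aws-glue-schema-registry-v.1.1.15}"
CONNECT_PLUGIN_DIR="${CONNECT_PLUGIN_DIR:-connect-plugins/glue-avro-converter}"

stage_converter() {
  local module_target="$1" dest="$2"
  local jars=("$module_target"/*.jar)
  # Without nullglob an unmatched pattern stays literal, so test the first entry.
  if [ ! -f "${jars[0]}" ]; then
    echo "no jars in $module_target (run build.sh first)" >&2
    return 1
  fi
  mkdir -p "$dest"
  # The module's own jar(s) produced by `mvn clean install`.
  cp "${jars[@]}" "$dest"/
  # Runtime dependencies written by `mvn dependency:copy-dependencies`, if present.
  if [ -d "$module_target/dependency" ]; then
    cp "$module_target"/dependency/*.jar "$dest"/
  fi
  local staged=("$dest"/*.jar)
  echo "staged ${#staged[@]} jar(s) into $dest"
}

stage_converter "$SRC_DIR/avro-kafkaconnect-converter/target" "$CONNECT_PLUGIN_DIR" || true
```

With the default destination, the worker's `plugin.path` would need to include the parent `connect-plugins` directory. The staged directory may contain more jars than the converter strictly needs, since it simply mirrors what Maven copied.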

Summary

The Glue Schema Registry provides features to manage and enforce schemas in data streaming applications, with convenient integrations with Apache Kafka and other AWS managed services. In order to utilise those features, we need to use the client library. In this post, I first introduced how the Glue Schema Registry works with Kafka producer and consumer apps and then illustrated how to build the client library.
