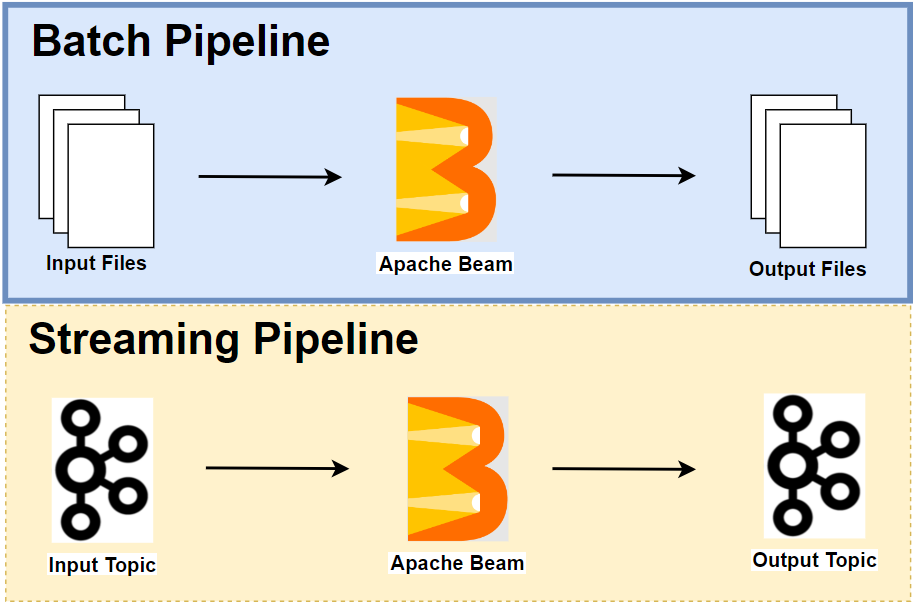

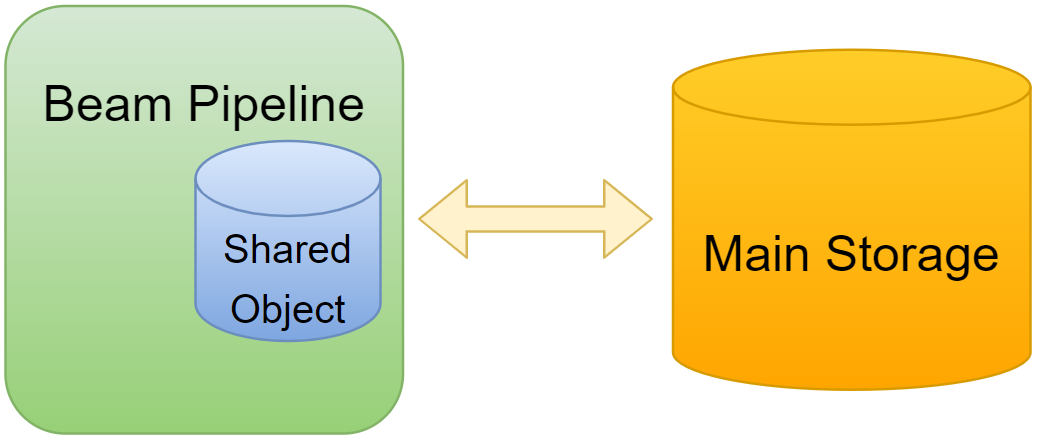

I recently contributed to Apache Beam by adding a common pipeline pattern - Cache data using a shared object. Both batch and streaming pipelines are introduced, and they utilise the Shared class of the Python SDK to enrich PCollection elements. This pattern can be more memory-efficient than side inputs, simpler than a stateful DoFn, and more performant than calling an external service, because it does not have to access an external service for every element or bundle of elements. In this post, we discuss this pattern in more details with batch and streaming use cases. For the latter, we configure the cache gets refreshed periodically.