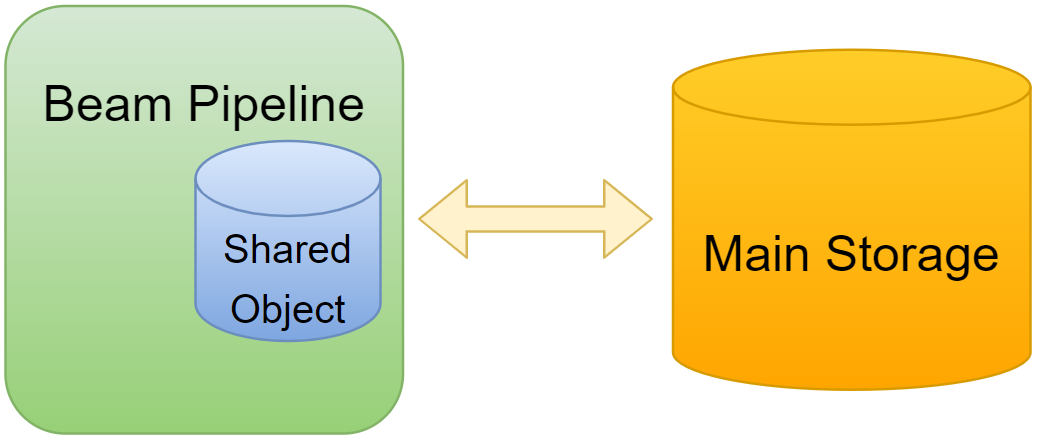

In the previous post, we continued discussing an Apache Beam pipeline that arguments input data by calling a Remote Procedure Call (RPC) service. A pipeline was developed that makes a single RPC call for a bundle of elements. The bundle size is determined by the runner, however, we may encounter an issue e.g. if an RPC service becomes quite slower if many elements are included in a single request. We can improve the pipeline using stateful DoFn where the number elements to process and maximum wait seconds can be controlled by state and timers. Note that, although the stateful DoFn used in this post solves the data augmentation task well, in practice, we should use the built-in transforms such as BatchElements and GroupIntoBatches whenever possible.