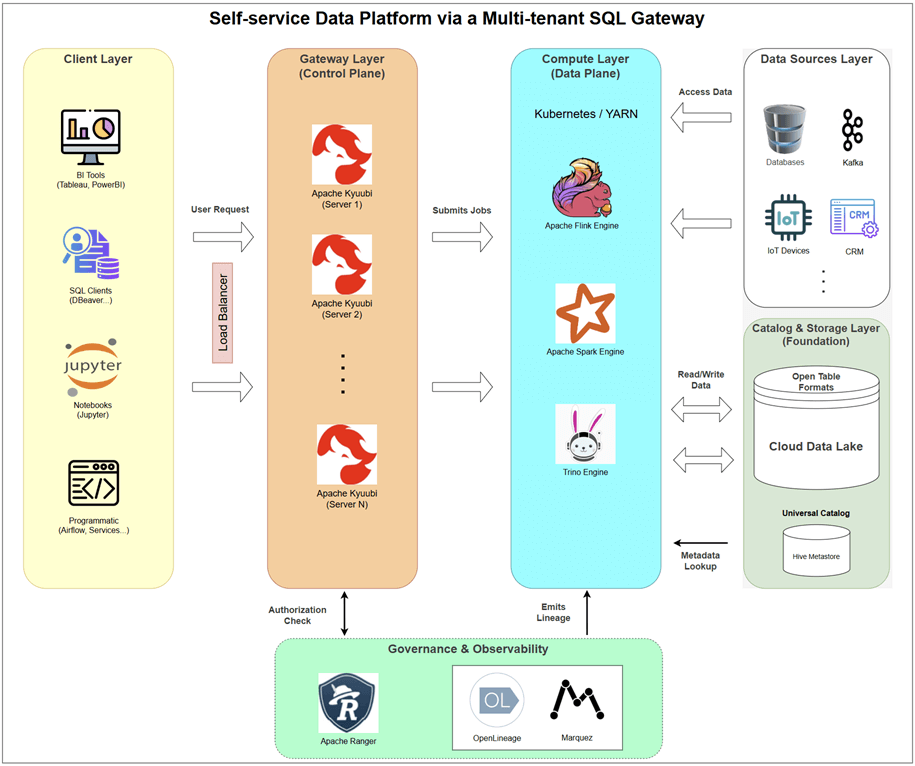

Giving users direct access to big data engines like Spark and Flink often undermines platform stability. A gateway-centric architecture addresses this by placing a control plane between users and the engines. This article presents a detailed blueprint that uses Apache Kyuubi, a multi-tenant SQL gateway, to provision and manage on-demand Spark, Flink, and Trino engines. The model delivers self-service analytics under centralized governance, reconciling user empowerment with platform stability.