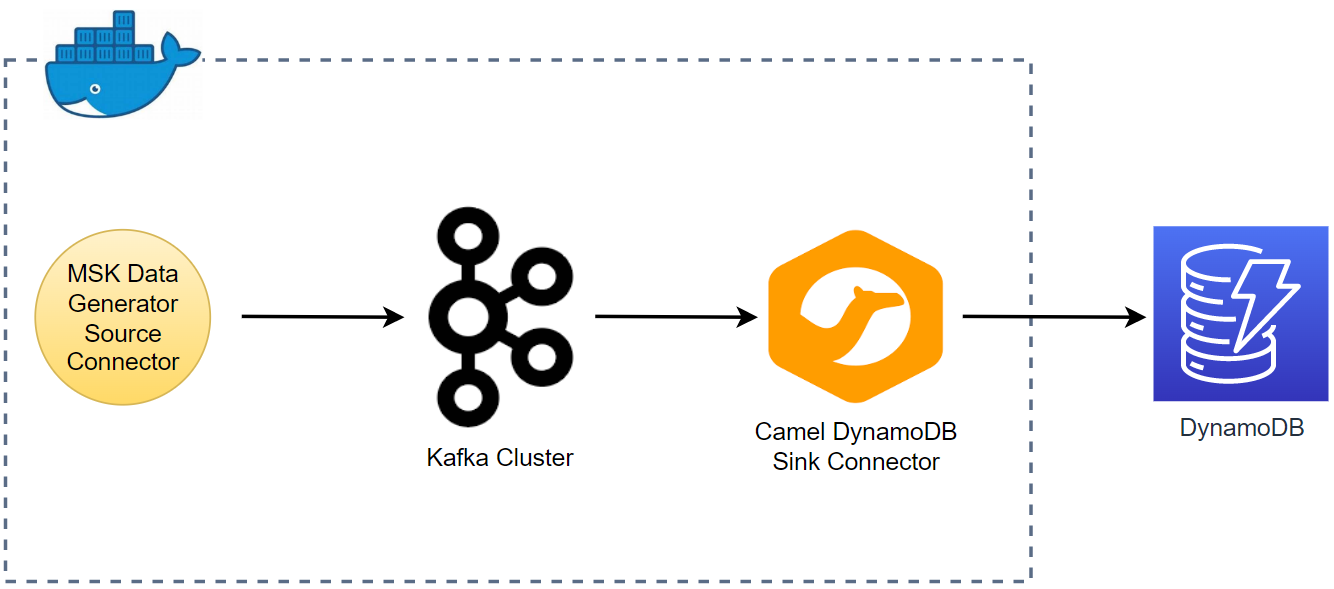

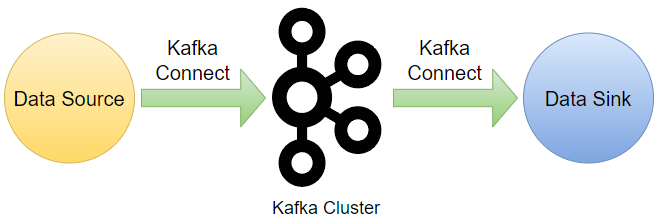

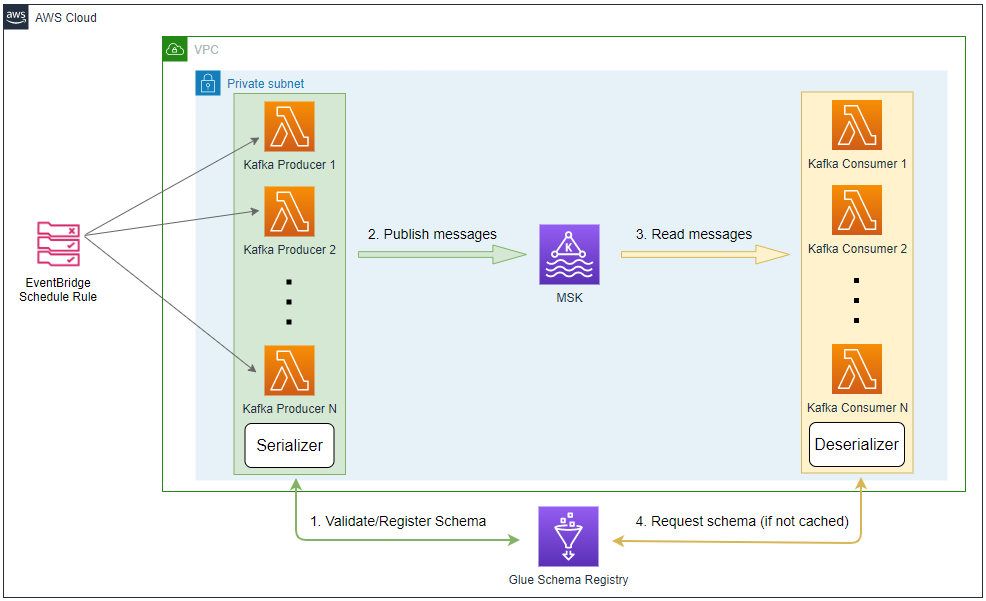

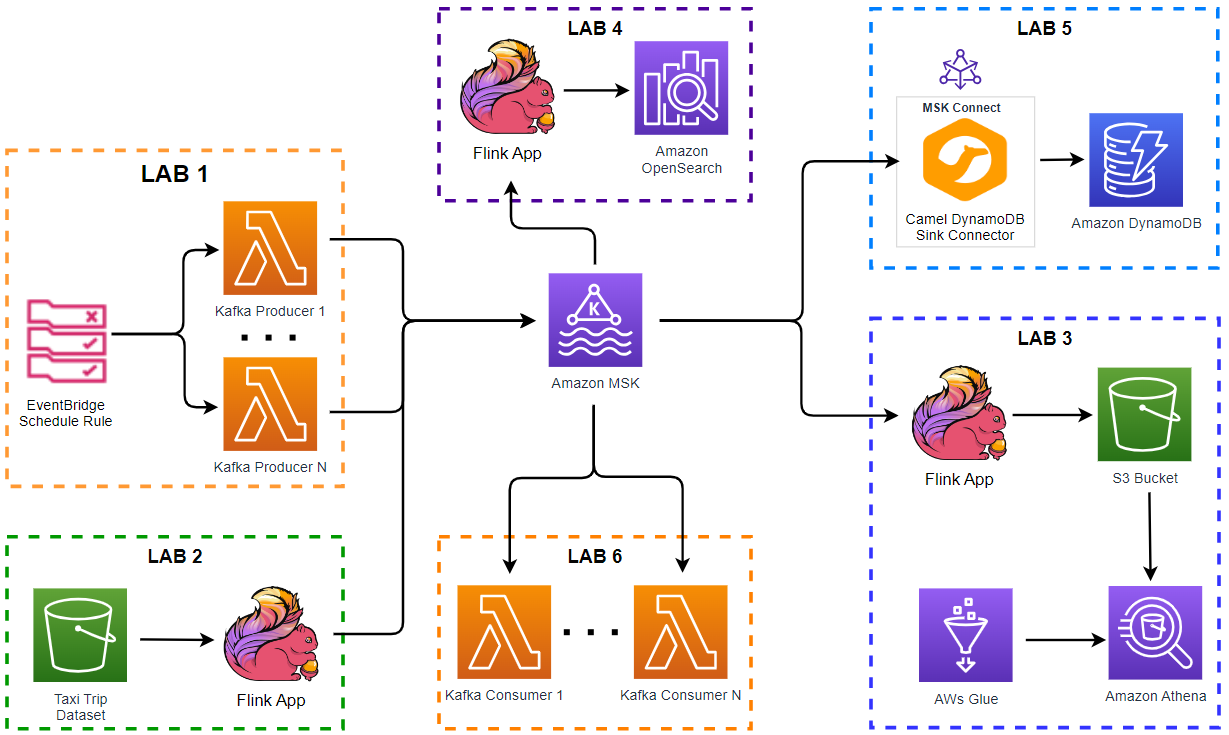

This series updates a real time analytics app based on Amazon Kinesis from an AWS workshop. Data is ingested from multiple sources into a Kafka cluster instead and Flink (Pyflink) apps are used extensively for data ingesting and processing. As an introduction, this post compares the original architecture with the new architecture, and the app will be implemented in subsequent posts.