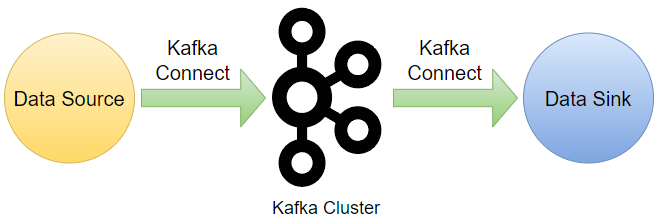

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. It can be used to build real-time data pipeline on AWS effectively. In this post, I will introduce available Kafka connectors mainly for AWS services integration. Also, developing and deploying some of them will be covered in later posts.